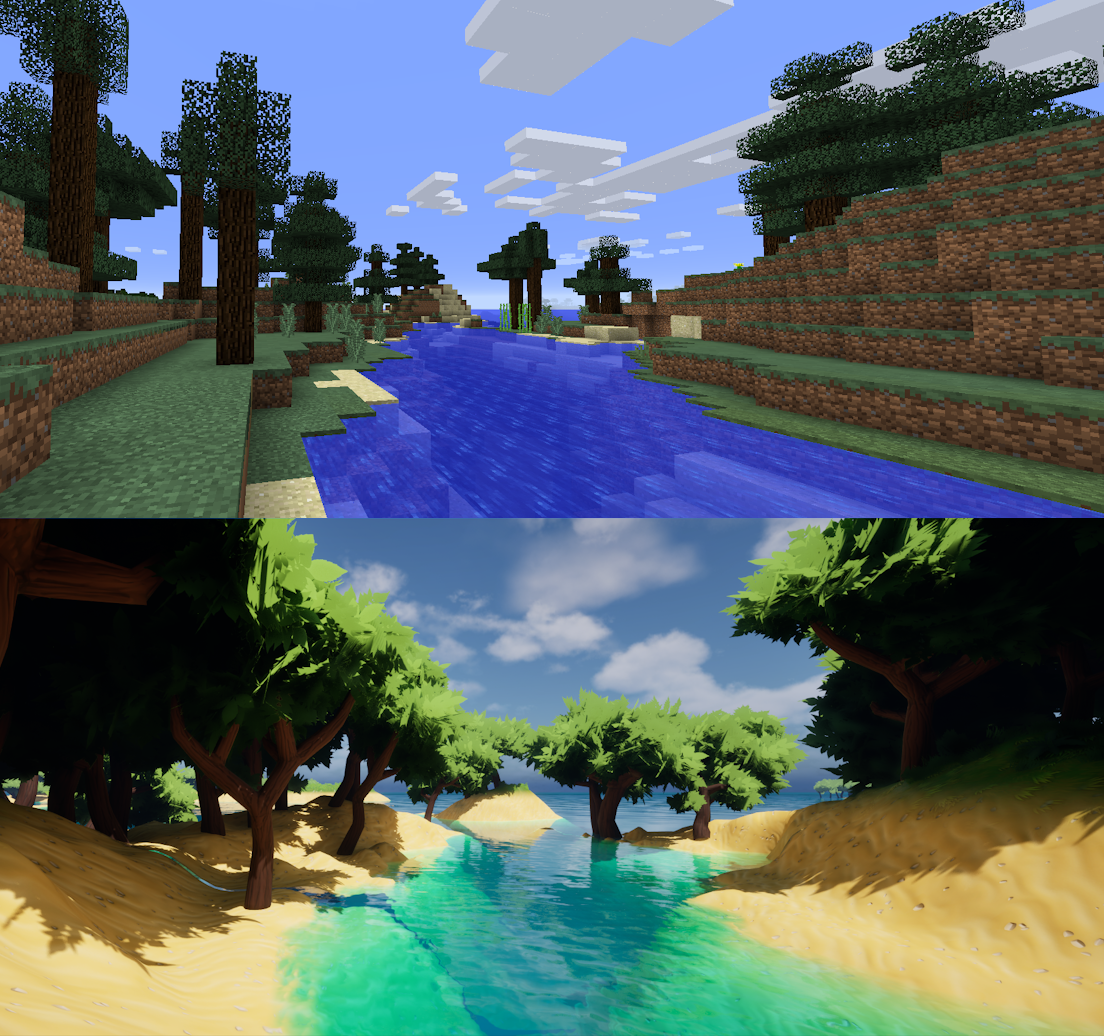

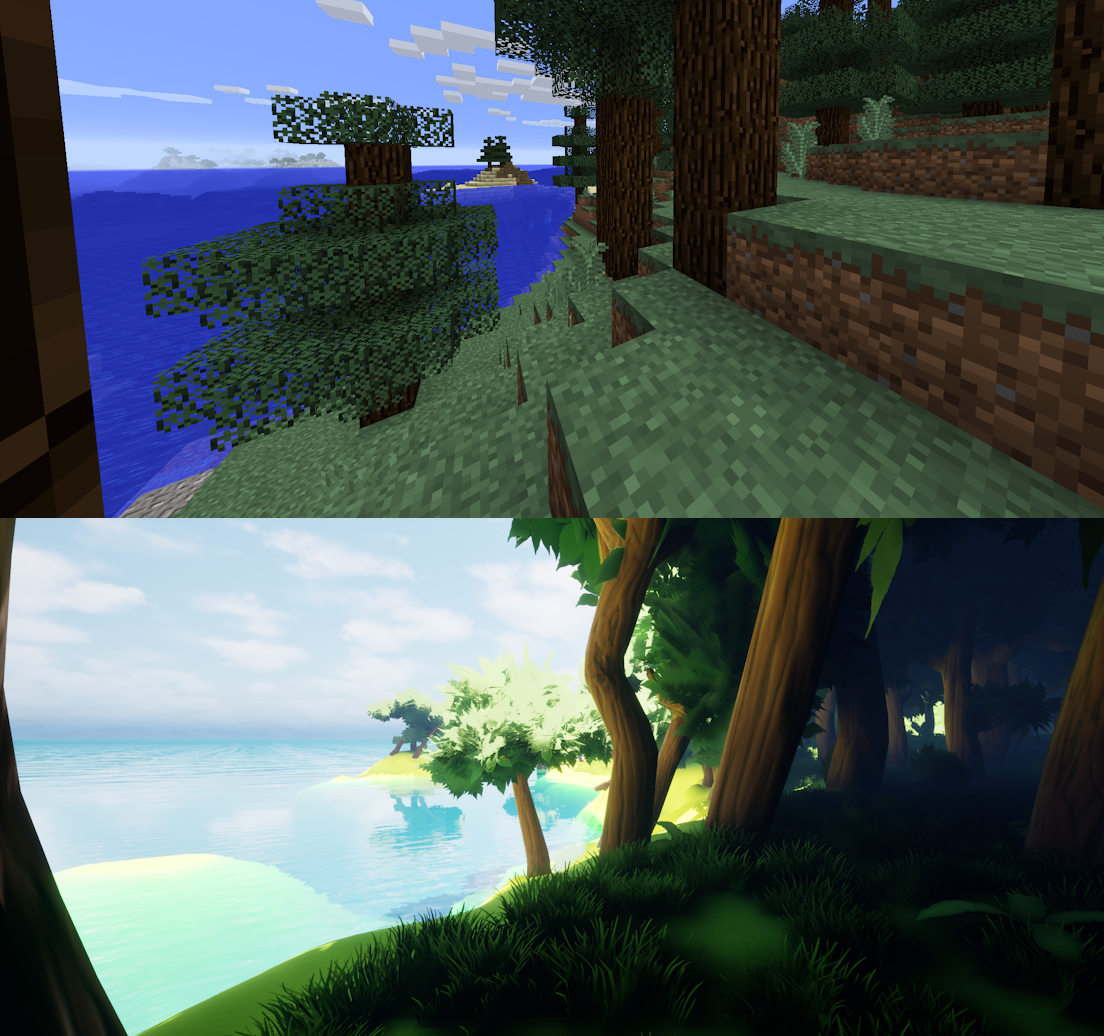

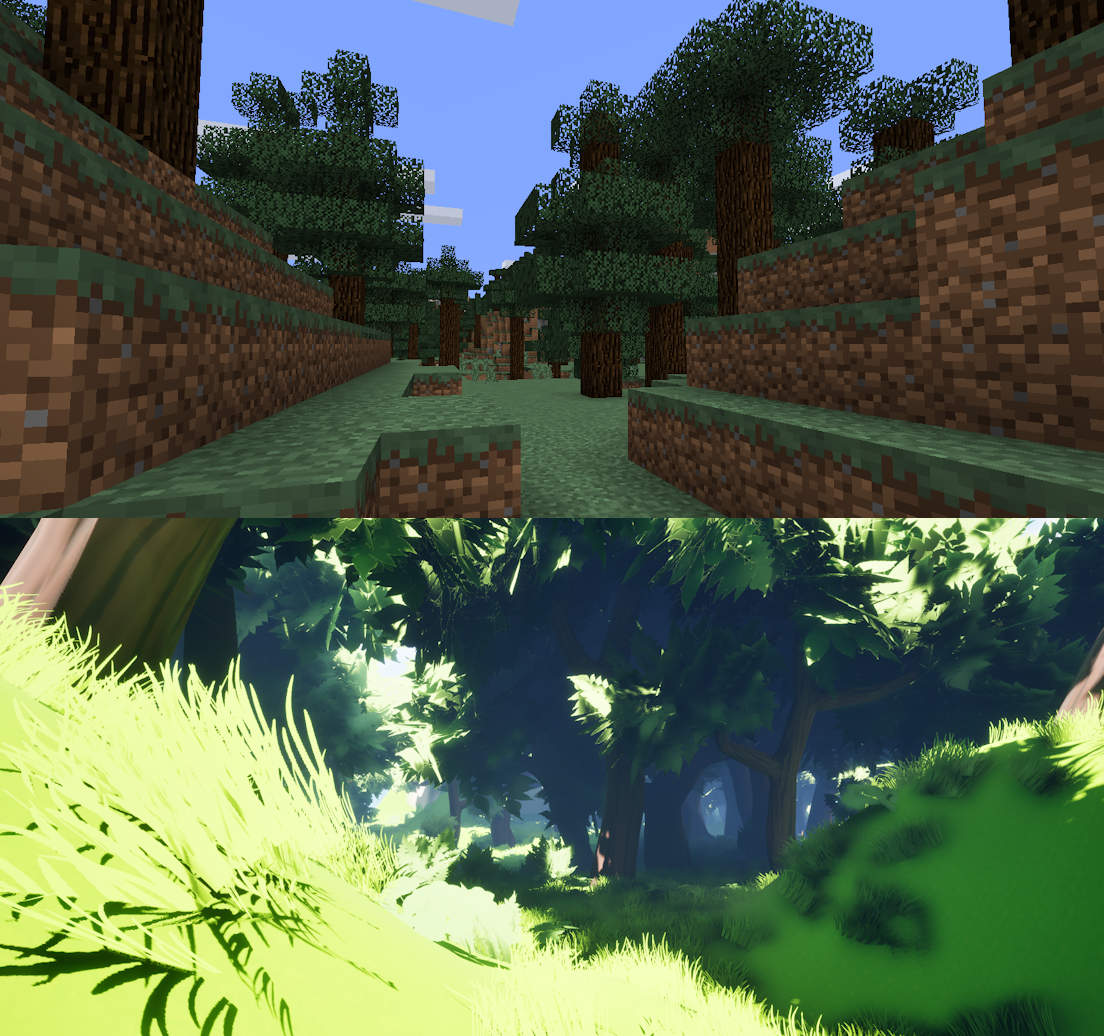

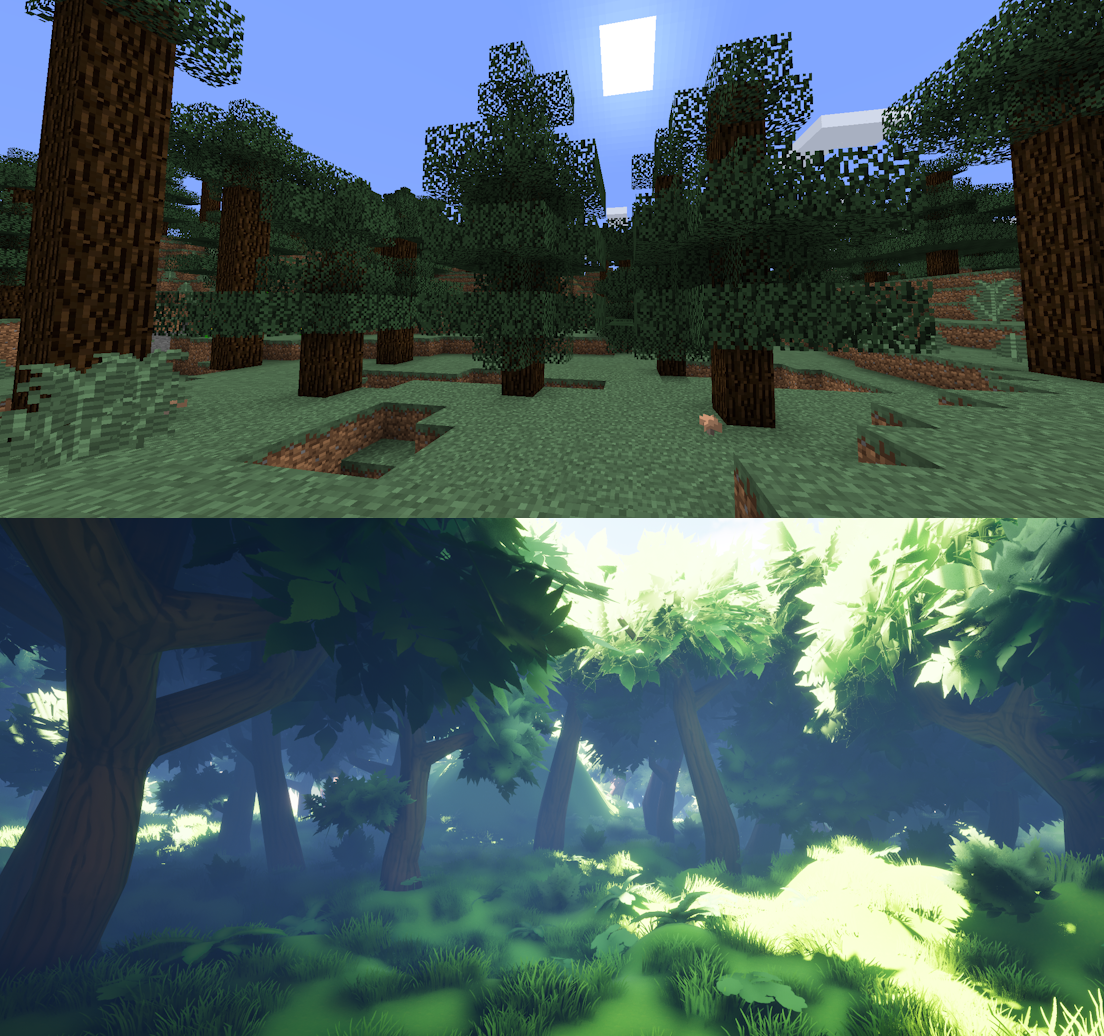

We introduce Minecraft to 3D, a pipeline that automatically converts a Minecraft build into a production‑ready polygonal scene. A 3D convolutional network recognises Minecraft’s default objects, the block surface is resampled into a smooth height‑map, and each recognised object is replaced with a high‑quality 3D model drawn from an external library. Object locations, orientations, and tags are preserved, a separate water plane is exported for engine‑level ocean rendering, and the final scene opens natively in modern 3D engines. The pipeline processes a one‑square‑kilometre world in under three minutes on a single consumer GPU, so educators, indie developers, and artists can move quickly from voxel sketches to fully lit environments.

Voxel data are streamed in 256 × 256‑block tiles with a 20‑block overlap so that structures crossing region boundaries retain context. A 3D U‑Net trained on Minecraft’s canonical assets—oak trees, villager houses, desert temples, and related variants—assigns a semantic label to every object. The network achieves 97.8 % mean intersection‑over‑union (mIoU) on isolated structures and 88.6 % where multiple structures intersect.
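The tiling scheme can be sketched as follows. This is a minimal illustration, not the pipeline's actual code: it assumes the 20‑block overlap is added as a margin on every side of each 256 × 256 core tile and clamped at the world edge, and the names `Tile` and `iter_tiles` are hypothetical.

```python
from typing import Iterator, NamedTuple


class Tile(NamedTuple):
    # Core bounds (half-open): the area this tile contributes to the output.
    core_x0: int
    core_z0: int
    core_x1: int
    core_z1: int
    # Padded bounds (half-open): core plus overlap, clamped to the world.
    pad_x0: int
    pad_z0: int
    pad_x1: int
    pad_z1: int


def iter_tiles(width: int, depth: int, tile: int = 256, overlap: int = 20) -> Iterator[Tile]:
    """Yield tiles covering a width x depth world, row by row.

    Assumption: the overlap is a per-side margin; the source only says
    "20-block overlap" without specifying how it is distributed.
    """
    for z0 in range(0, depth, tile):
        for x0 in range(0, width, tile):
            x1 = min(x0 + tile, width)
            z1 = min(z0 + tile, depth)
            yield Tile(
                x0, z0, x1, z1,
                max(x0 - overlap, 0), max(z0 - overlap, 0),
                min(x1 + overlap, width), min(z1 + overlap, depth),
            )


tiles = list(iter_tiles(1000, 1000))
```

Under these assumptions a 1000 × 1000‑block world yields a 4 × 4 grid of tiles. The network would see the padded bounds, while only the core bounds are kept when tiles are cropped and merged.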

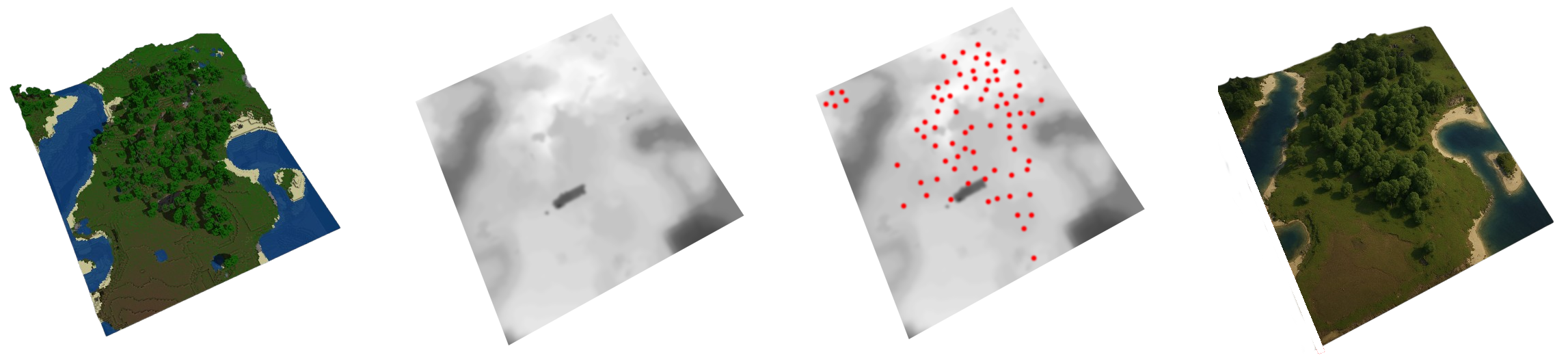

After segmentation, non‑terrain voxels are hidden, the stepped surface is up‑sampled with trilinear interpolation, and an anisotropic Gaussian filter removes staircase artefacts. Tiles are cropped to their original bounds, then merged with a Poisson blend; the blend suppresses seams without disturbing coarse relief.
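To illustrate the smoothing step, the sketch below applies a separable Gaussian filter with a different sigma along each horizontal axis to a 2D height‑map. It is an assumption‑laden stand‑in: the source does not say how the anisotropy is oriented or parameterised, the up‑sampling and Poisson blend are omitted, and the function names are hypothetical.

```python
import math
from typing import List

Grid = List[List[float]]  # indexed as grid[z][x]


def gaussian_kernel(sigma: float) -> List[float]:
    """Normalised 1D Gaussian kernel truncated at three sigma."""
    if sigma <= 0:
        return [1.0]
    radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def convolve_1d(values: List[float], kernel: List[float]) -> List[float]:
    """Convolve one row or column, clamping indices at the borders."""
    radius = len(kernel) // 2
    n = len(values)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * values[j]
        out.append(acc)
    return out


def smooth_heightmap(h: Grid, sigma_x: float, sigma_z: float) -> Grid:
    """Axis-aligned anisotropic smoothing: filter along x, then along z."""
    kx = gaussian_kernel(sigma_x)
    kz = gaussian_kernel(sigma_z)
    rows = [convolve_1d(row, kx) for row in h]
    cols = [convolve_1d(list(col), kz) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]


# A staircase that rises one block every four blocks along x.
stairs = [[float(x // 4) for x in range(32)] for _ in range(8)]
smoothed = smooth_heightmap(stairs, sigma_x=2.0, sigma_z=0.5)
```

Raising `sigma_x` or `sigma_z` flattens the staircase further; lowering them keeps more of the original block detail.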

Recognised objects are substituted next. The object list drives a query against an external model library, returning high‑quality geometry that matches class, scale, and broad proportions. Every model is rigidly aligned and normalised to the local ground patch so that it sits flush with the terrain. The water layer is exported separately as a flat mesh at the recorded sea level, and engines can replace it with their own ocean shaders or leave it intact for stylised rendering.
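One simple way to seat a replacement model on the terrain is sketched below. It is not the authors' alignment method: it assumes an axis‑aligned footprint, uses the lowest ground sample so that no part of the base floats, applies a uniform scale, and maps Minecraft facings to yaw angles with a hypothetical convention (`FACING_TO_YAW`).

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical convention: yaw in degrees, clockwise from north.
FACING_TO_YAW = {"north": 0.0, "east": 90.0, "south": 180.0, "west": 270.0}


@dataclass
class Placement:
    x: float
    y: float
    z: float
    yaw_degrees: float
    scale: float


def place_model(
    heightmap: List[List[float]],          # indexed as heightmap[z][x]
    footprint: Tuple[int, int, int, int],  # (x0, z0, x1, z1), half-open, in blocks
    model_size: Tuple[float, float],       # model width and depth in its own units
    facing: str,
) -> Placement:
    x0, z0, x1, z1 = footprint
    # Sample the ground patch under the object's footprint.
    samples = [heightmap[z][x] for z in range(z0, z1) for x in range(x0, x1)]
    # Assumption: rest the base on the lowest sample so nothing floats.
    ground_y = min(samples)
    # Uniform scale so the model's longest side matches the footprint's longest side.
    scale = max(x1 - x0, z1 - z0) / max(model_size)
    return Placement(
        x=(x0 + x1) / 2,
        y=ground_y,
        z=(z0 + z1) / 2,
        yaw_degrees=FACING_TO_YAW[facing],
        scale=scale,
    )
```

Using the minimum height sinks the base slightly into sloped ground; a mean or a fitted plane would be reasonable alternatives depending on the asset.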

Processing a 1 km² map—about 65 million blocks—takes 147 s on an RTX 4090 and never exceeds 3.2 GB of system memory thanks to a sparse‑voxel octree. CNN inference accounts for 84 % of that time; the remainder is height‑map synthesis, Poisson blending, and model placement. Exported scenes open in Blender 4.1, Unreal 5.4, Unity 2023 LTS, and Godot 4.3 without missing geometry, inverted normals, or material errors.
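The reported figures imply the following rough breakdown. The snippet only rearranges numbers stated above; the per‑column depth assumes the usual one‑metre Minecraft block, so 1 km² corresponds to 1000 × 1000 columns.

```python
TOTAL_SECONDS = 147.0    # reported wall-clock time
CNN_FRACTION = 0.84      # reported share spent in CNN inference
BLOCKS = 65_000_000      # reported approximate block count
COLUMNS = 1000 * 1000    # 1 km^2 at one block per metre

cnn_seconds = TOTAL_SECONDS * CNN_FRACTION    # about 123.5 s
other_seconds = TOTAL_SECONDS - cnn_seconds   # about 23.5 s for height-map, blend, placement
blocks_per_second = BLOCKS / TOTAL_SECONDS    # about 442,000 blocks/s
mean_column_depth = BLOCKS / COLUMNS          # about 65 blocks per column

print(f"CNN: {cnn_seconds:.1f} s, other stages: {other_seconds:.1f} s")
print(f"Throughput: {blocks_per_second:,.0f} blocks/s")
```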

Heavy smoothing erases the blocky staircase that defines Minecraft but also removes fine detail: individual steps and decorative block patterns flatten into subtle slopes. Lowering the filter strength restores detail at the cost of bumpier terrain, which can feel awkward for first‑person navigation. The pipeline handles only the default block set; custom or modded blocks fall back to “terrain” and receive no model substitution. Intersecting structures can also confuse the network, which occasionally merges two labels and then selects the wrong replacement.

Indie studios can prototype a level in Minecraft, run it through our pipeline, and continue in any game engine with their own art assets. A 3D artist who wants a quick starting scene can build the rough layout in Minecraft, press export, and spend the saved hours on lighting and shading instead of manual modelling. Educators can generate cinematic fly‑throughs of student projects, and virtual‑production teams can move collaboratively designed block sets onto LED stages with minimal hand‑off.

We are training on community‑created structures to extend beyond the default asset set, and we are testing on‑demand AI‑generated geometry so that a substituted model can match style prompts or concept art. Additional work targets an adaptive smoothing filter that keeps critical block detail while still eliminating visible staircases.

@unpublished{lewis2025mc3d,
  author = {Lewis, Sean Hardesty},
  title = {Minecraft to 3D: A Pipeline for High-Fidelity Reconstruction of Minecraft Worlds},
  booktitle = {Proceedings of SIGGRAPH Posters 2025},
  year = {2025},
  doi = {}
}